Putting AI Coaching and Practice to the Test

Overview

Sales division leadership wanted to explore sales enablement platforms that could strengthen coaching and skill practice while fitting into Salesforce workflows. The work centered on evaluating AI enabled capabilities in a way that tested adoption risk, not just feature depth. After meeting with multiple vendors, two finalists moved forward into proof of concept builds tied to real sales scenarios. A 30-person pilot with regional sales and account executives provided practical usage and feedback signals. The pilot results were analyzed and summarized for leadership, resulting in a recommendation to not move forward at that time.

*Some details of this project and internal materials are confidential. This case study focuses on my role, process, and outcomes; specifics have been generalized and no internal content is shown.

Background and Problem Context

Raising performance consistency and skill building was a key goal of sales leadership. The sales team spanned both national and regional segments, with sales executives spread across multiple locations. Sales executives already used Salesforce; however, training, coaching, practice, and just in time reinforcement lived outside that workflow, which made it harder to build shared habits. Inconsistent practices for onboarding and manager enablement further contributed to uneven coaching and reinforcement. As a result, how sales executives showed up as product ambassadors varied by individual and manager. This affected how the value story on company differentiation was delivered in broker conversations.

Broker feedback reinforced that leading with the organization’s value, clearly and confidently, was a differentiator. The project started from a practical premise: if coaching and practice support could live where sales executives already work, strengthened by AI enabled tools, adoption would be more realistic and performance support could become part of the flow of work.

Audience

The target audience for this pilot was the team members who would use the platform day to day, plus the leaders responsible for performance outcomes across the sales organization.

- Sales executives (regional and national): Needed fast, reliable access to selling resources in the flow of work, along with structured ways to practice and improve how they showed up in broker and client conversations.

- Team managers: Needed a consistent way to coach and reinforce expectations, support practice without adding heavy lift, and see usage and performance signals that helped them target follow up.

- Sales leadership (stakeholders): Head of sales plus regional and national sales leaders who set the priorities for the evaluation and owned the investment decision.

Together, these groups ensured the evaluation stayed grounded in day to day workflow realities while still answering the strategic questions leadership needed to make a platform decision.

Objectives

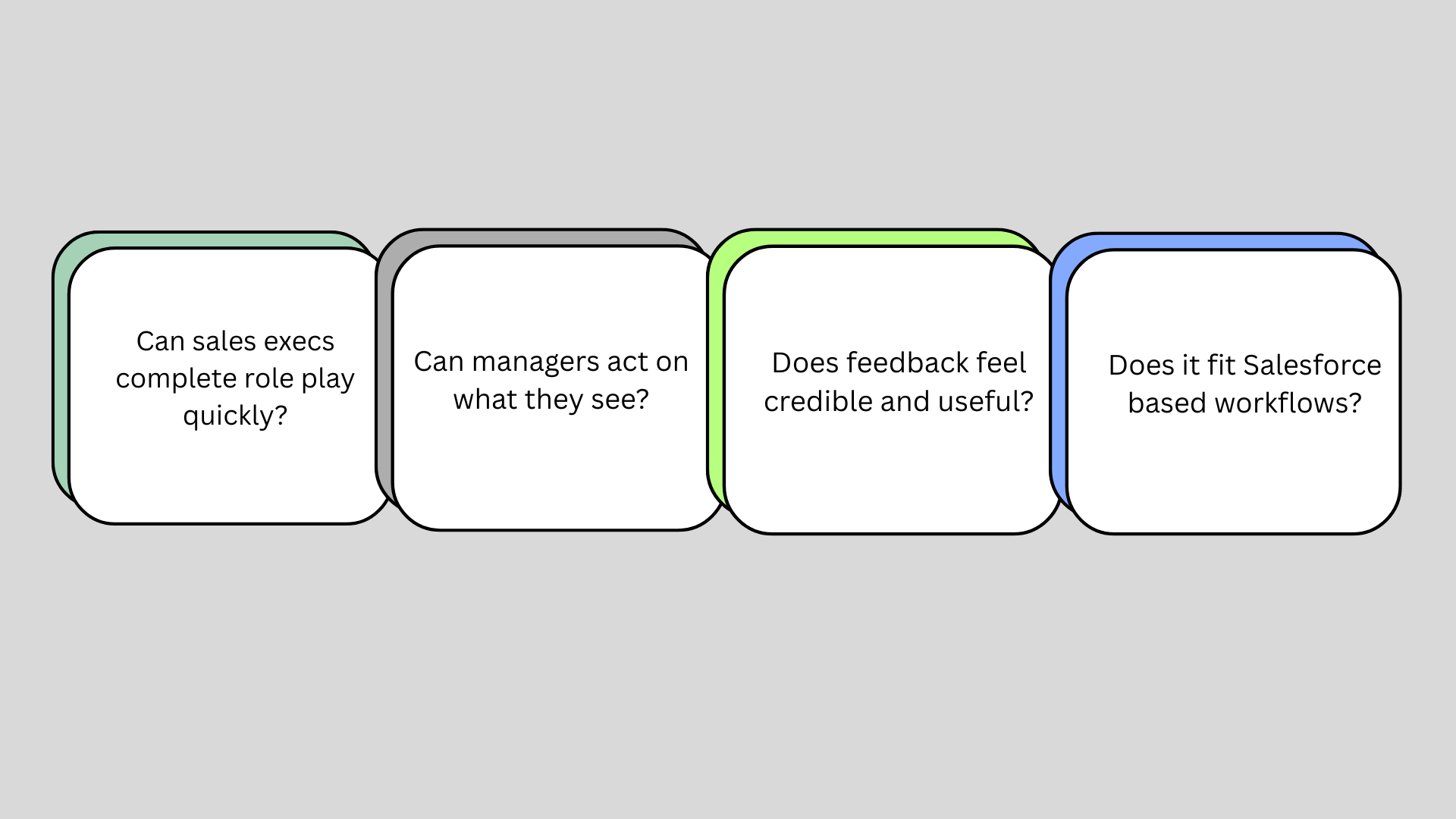

The pilot was designed to answer a practical question: would AI enabled coaching and role play feel easy enough to use, and meaningful enough, that sales executives and managers would choose it in the middle of real work? The focus was not on perfecting a curriculum. It was on testing whether the experience supported realistic practice, delivered feedback participants could apply, and fit naturally into existing sales routines rather than adding another layer of effort.

To keep the pilot grounded, the objectives were straightforward:

- Assess ease of use for sales executives completing AI role play and practice activities.

- Evaluate whether participants found the coaching experience meaningful, credible, and worth repeating.

- Understand manager usefulness, including whether results were clear enough to guide follow up coaching.

- Identify workflow friction points that would impact adoption at scale, including how well the experience fit alongside Salesforce based work.

Assets I Developed

To support a fair evaluation and a realistic pilot, I built a set of connected assets that made it possible to test both platforms using the same use case and report results clearly to sales leadership:

- Pilot practice experience (value story) with AI role play: Co-designed with a team member, vendors, and sales leaders so participants practiced a realistic sales conversation and received AI enabled feedback.

- Pilot communications and support channel: Created the kickoff and participation guidance for the pilot. Set up a dedicated channel we monitored to answer questions during the pilot window.

- Platform comparison tables: Built side by side comparisons focused on workflow fit and ease of use. Documented the effort required to build and maintain AI role play activities.

- Pilot feedback survey: Designed a short survey to capture ease of use, perceived usefulness, credibility of feedback, and likelihood of repeat use.

- Leadership readout deck and recommendation summary: Delivered a decision ready readout tying pilot signals to feasibility. Documented the no go recommendation and a plan to revisit in six months.

Process Journey

March to April 2024

March and April set the direction for the entire effort. Work began with sales leadership to translate interest in AI coaching into clear objectives aligned to sales strategy, and to define what success would need to look like for sales executives in real conversations. With that shared definition in place, vendor demos were scheduled as a learning step. Those early conversations clarified what was realistic and helped narrow the field to the vendors worth deeper evaluation.

May to June 2024

As vendors were compared more closely, the evaluation approach was shaped so platforms could be assessed using the same expectations and the same use case. This is where Allego and Seismic emerged as the two finalists for deeper testing. Throughout this phase, updates were shared with sales leaders weekly to keep the work transparent and decisions grounded.

July to August 2024

Proof of concept work ramped up with both vendors. I led the project and served as the main point of contact between the vendors, my team, and sales division leaders. In partnership with a team member and sales leaders, the value story practice lesson was built using AI role play so the pilot would test a realistic coaching experience.

September to October 2024

These months were focused on participant communication and making adjustments based on what we were seeing as the experience took shape. I led and owned communication from end to end, including guidance for participants and a dedicated support channel monitored for questions. Weekly updates continued so sales leaders had visibility into what was changing and why.

November 2024

Pilot Wave 1 ran in November. Feedback was collected through the pilot survey with attention on ease of use and whether the experience felt meaningful enough to repeat.

December 2024

Pilot Wave 2 ran in December to confirm patterns and reduce decision risk. Findings were consolidated into a leadership readout and recommendation debrief. The recommendation was to pause and revisit in six months once vendor AI roadmaps were further along.

Results and Impact

Over the eight month evaluation, pilot participant feedback was consolidated into a clear view of what was working, what was not, and what it would take to make a platform like this viable.

Mixed engagement

Participation and repeat use varied across the pilot group, which reinforced that the experience needed to feel immediately useful to compete with day to day priorities.

Platform fit

Allego leaned more video based, which limited flexibility for structured practice and reinforcement. Seismic functioned more like an LMS and supported AI role play, but the record and submit workflow and AI scored rubric were not intuitive for many participants.

Ease of use differences and limited AI value

One platform felt easier to navigate, but neither delivered AI role play and coaching capabilities that were strong enough to create a meaningful advantage for sales executives or team managers.

Operational lift

In both platforms, creating AI role plays required hands on vendor involvement and was not easy to maintain. Even with AI avatars and rubric based evaluation, the feedback did not translate into enough actionable value to justify the ongoing effort.

The pilot closed with a recommendation debrief to department heads that connected usability, field feedback, and sustainability considerations. The work created a grounded baseline for what the sales organization would need from a platform like this to make coaching and practice worth the effort. The recommendation was to pause and revisit the evaluation in six months once vendor AI roadmaps matured.

Lessons Learned

The biggest lesson from this work was that “easy to use” is not the same as “worth using.” The pilot helped separate novelty from value by testing whether AI coaching feedback actually supported better practice, and whether managers could use the results in a way that improved coaching, not just reporting. It also reinforced how important it is to evaluate these tools in real workflows. What looked polished in demos shifted quickly once sales executives tried to use it alongside day to day priorities.

A second takeaway was the operational reality of AI role play. Both platforms required more hands on vendor involvement to build and maintain scenarios than expected, which raised sustainability concerns early. That insight became just as important as the participant experience, because even a strong tool will struggle if it cannot be supported without heavy lift.

Finally, the project underscored the value of steady stakeholder communication during long evaluations. Weekly updates created shared visibility across sales leaders and kept the team aligned on what we were learning as the pilot progressed. The closeout debrief made it possible to land a no go recommendation with clarity and confidence, while still leaving the door open to revisit the market once vendor roadmaps mature.